- From 2015-2020, 3 ADs (Airworthiness Directives) were issued:

- A 787 continuously powered for 248 days can lose all AC power due to the GCUs (Generator Control Units) going into fail-safe mode; the S/w counter is local to the GCU.

- On ground, power cycling or a reboot of the main electrical power &/or of the Control Modules is required.

- All 3 Control Modules might reset together if powered for 22 days.

- The stale data monitoring function of the Common Core System (CCS) may be lost if powered for 51 days, leading to loss of Common Data N/w (CDN) message age validation, combined with a CDN switch failure. IDK what this means.
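The AD text quoted here only says the counter is local to the GCU. A commonly suggested (unconfirmed) explanation for the exact 248-day figure is a signed 32-bit counter of 10 ms ticks, which overflows after 2^31 ticks. The sketch below only checks that arithmetic and shows the wrap to a negative value; the tick period and counter width are assumptions, not facts from the AD.

```python
# Hypothesis, NOT confirmed by the AD: a signed 32-bit counter of 10 ms ticks in each GCU.
TICK_SECONDS = 0.01          # assumed tick period (centiseconds)
INT32_MAX = 2**31 - 1


def days_until_overflow(tick_seconds: float = TICK_SECONDS) -> float:
    """Days of continuous power before a signed 32-bit tick counter overflows."""
    return (INT32_MAX + 1) * tick_seconds / 86_400


def to_int32(value: int) -> int:
    """Emulate two's-complement wraparound of a signed 32-bit register."""
    value &= 0xFFFFFFFF
    return value - 2**32 if value >= 2**31 else value


if __name__ == "__main__":
    print(f"{days_until_overflow():.2f} days")   # 248.55 days
    print(to_int32(INT32_MAX + 1))              # -2147483648: the counter goes negative
```

If the hypothesis is right, any logic that assumes "time only moves forward" breaks at that instant, which is why periodic power cycling works as an interim fix.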

So every aircraft has some kind of S/w glitches, hence upgrades are needed.

The 787 has them too.

Some sound very specific & technical, difficult to understand even for techies at first glance. For example, the last one above:

"Stale data monitoring function of Common Core system (CCS) may be lost if powered for 51 days, leading to loss of Common Data N/w (CDN) message age validation, combined with CDN switch failure."

I asked in other forums what this has to do with a simultaneous dual engine failure, & the response/outcome so far is that POTENTIALLY these stale/delayed control signals, going from sensors through the network to the FMC & then to the MFDs, can make data display late, which might induce pseudo-pilot error.

Stale data reaching the engine EEC could also affect it.

But such a delay would be in the 100s of milliseconds & then corrected.

This error potential ASSUMES A SWITCH FAILURE ALSO, when H/w & S/w redundancies are expected.

An analysis by someone was shared (https://www.ioactive.com/reverse-en...e-boeing-787-51-days-airworthiness-directive/).

I would divide this into 4 areas-

- H/w

- S/w

- communication

- clock & n/w nodes sync.

I'll mention some points for a general global audience & non-IT techies.

People with knowledge of the OSI stack, N/w protocols, resource virtualization & partitioning can understand these things more easily.

H/w -

- In general, a critical switch needs to be modular, with at least 2 controller modules & multiple port modules, each with at least 2 ports connected to both N/w A & B.

- Such a switch also has temperature sensors & smoke detectors.

- Cooling fan modules are also redundant. If 1 fails, the others spin faster & an alert is raised.

- And if 1 entire switch fails, then the other N/w switch is supposed to route the data.

- So there is protection against:

- port/cable failure,

- module failure,

- controller failure

- cooling fan failure

- entire switch failure

+ the power redundancies I mentioned earlier.

S/w (messages, data structures) -

In switches & routers in a datacenter, for example:

- There are multiple modules needing multiple types of logs & look-up tables, like the MAC/CAM table, the ARP (Address Resolution Protocol) table with IP address to MAC address mapping; then routing protocols like OSPF, EIGRP, etc. have their routing tables & N/w topology tables, then link state tables, etc.

- Some tables, like topology, are distributed across the N/w whenever there is a link state change.

- All entries in these tables have their own default age, which can be tweaked if required.

- After the age timer reaches zero the entries are deleted, & when a new PDU arrives the entries are put in the tables again. But this doesn't affect the working of the device or the display to the admin team.

- Switches/routers do malfunction due to numerous H/w & S/w issues, & for certain issues reboots are done first at the smallest modular level; if that doesn't work, then at the intermediate section level; if it still doesn't work, the entire switch/router is rebooted. But AFAIK, a S/w bug usually relates to the OS or memory, not timestamps.

- If an entire switch/router malfunctions (rarely), then the link state changes, the N/w topology changes, & a new master node might be needed, chosen by automatic election through exchanging some info. This can take a few seconds. HOWEVER, some newer protocols either have an 'active-active' mirrored system distributing work among 2 modules or 2 nodes, OR a deputy node is selected proactively to take over instantly, minimizing re-direction delay.

- L3 routers provide redundancy through protocols like VRRP (Virtual Router Redundancy Protocol), GLBP (Gateway Load Balancing Protocol), HSRP (Hot Standby Router Protocol), VPC (Virtual Port Channel), etc.

- L2 Ethernet switches provide redundancy through PRP (Parallel Redundancy Protocol), Port Channel, VPC, Cisco has CEF, etc.
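The age-timer behaviour described in the bullets above (entries expire, then get re-learned when a new PDU arrives) can be sketched as a small table class. This is a generic illustration, not any vendor's implementation; the 300-second max age is just an example value, and the injectable clock exists only to make the demo deterministic.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class AgingTable:
    """MAC/ARP-style lookup table whose entries expire after max_age seconds."""

    def __init__(self, max_age: float, clock: Callable[[], float] = time.monotonic):
        self.max_age = max_age
        self.clock = clock
        self._entries: Dict[str, Tuple[str, float]] = {}

    def learn(self, key: str, value: str) -> None:
        """Insert or refresh an entry when a PDU arrives (restarts its age timer)."""
        self._entries[key] = (value, self.clock())

    def lookup(self, key: str) -> Optional[str]:
        """Return the stored value, or None if absent or aged out.

        Aged-out entries are deleted lazily, at lookup time.
        """
        item = self._entries.get(key)
        if item is None:
            return None
        value, learned_at = item
        if self.clock() - learned_at > self.max_age:
            del self._entries[key]
            return None
        return value


if __name__ == "__main__":
    now = [0.0]                                   # fake clock for the demo
    table = AgingTable(max_age=300, clock=lambda: now[0])
    table.learn("00:11:22:33:44:55", "port 7")
    now[0] = 200
    print(table.lookup("00:11:22:33:44:55"))      # port 7
    now[0] = 600
    print(table.lookup("00:11:22:33:44:55"))      # None (aged out)
    table.learn("00:11:22:33:44:55", "port 7")    # a new PDU re-learns it
    print(table.lookup("00:11:22:33:44:55"))      # port 7
```

Expiry only drops a cached entry; the next PDU repopulates it, which matches the point above that aging by itself does not disrupt the device.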

Communication -

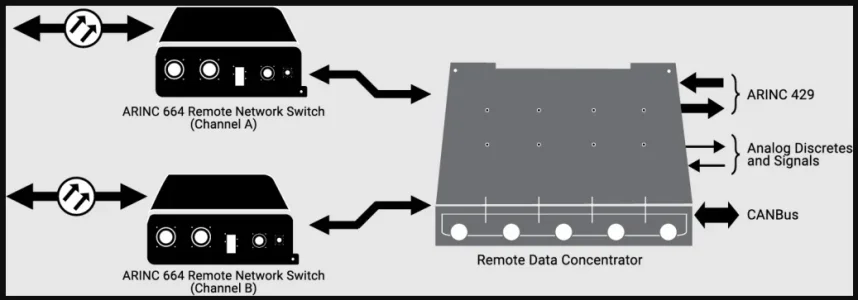

- There are 2 types of communication: data & control signals.

- Data received by the FMC would be from sensors & components, & about control surface positions.

- Data sent by the FMC would be instructions & new values to operate all moving components, plus data to displays & logs.

- There is a choice to send control signals in a dedicated N/w separate from the data channels, like the CAN (Controller Area Network) used by the RDCs here in the 787; this is called "Out of Band communication".

- If data & control signals are sent in the same channel, it is called "In Band communication".

- Communication is made redundant with at least 2 channels/sub-networks.

- So the H/w redundancies mentioned above at the port & module level facilitate these redundancies.

- No matter which link protocol is used (Ethernet, Fibre Channel, PPP, etc.), each frame carries a CRC/FCS, so corrupted PDUs (Protocol Data Units) are detected & dropped. However, plain Ethernet is connectionless: it does not acknowledge or sequence each PDU; that comes from higher layers (e.g. TCP) or from the specific protocol used on top.
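The CRC check mentioned above can be demonstrated with Python's `zlib.crc32`, which computes the same CRC-32 polynomial that Ethernet uses for its FCS. The frame layout here (payload followed by 4 CRC bytes, little-endian) is a simplification for illustration, not an exact Ethernet or ARINC 664 frame.

```python
import zlib


def frame_with_fcs(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 (the polynomial Ethernet uses for its FCS)."""
    return payload + zlib.crc32(payload).to_bytes(4, "little")


def fcs_ok(frame: bytes) -> bool:
    """Recompute the CRC over the payload and compare it with the trailing 4 bytes."""
    payload, fcs = frame[:-4], frame[-4:]
    return zlib.crc32(payload).to_bytes(4, "little") == fcs


def flip_bit(data: bytes, bit: int) -> bytes:
    """Simulate a single-bit error (e.g. noise on the link) at position bit."""
    buf = bytearray(data)
    buf[bit // 8] ^= 1 << (bit % 8)
    return bytes(buf)


if __name__ == "__main__":
    frame = frame_with_fcs(b"flap position=12.5")
    print(fcs_ok(frame))                  # True
    print(fcs_ok(flip_bit(frame, 10)))    # False: single-bit errors are always caught
```

Note the limit: if a bit flips in memory *before* `frame_with_fcs` runs, the CRC is computed over the already-corrupted payload and the check passes. A CRC protects the transit, not the source.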

Clocking, Synchronization -

- All the functions & data structures are independent & are synchronized to the battery-powered clock of their network or computing node.

- For all network nodes to sync together, every transmission protocol has a first section in its PDU (Protocol Data Unit), called the "preamble" or similar, for sync: a pattern of 0/1 bits. Ethernet has a 7-byte preamble.

- After the initial sync, there will be periodic sync too.

- The log messages are referenced to GMT, from which the display/analysis tools can calculate local time.

- How do 2 devices in 2 different time zones, or across the world, interact through the internet? Do they consider each other's local time? NO. They use things like NTP (Network Time Protocol).

- There can be a secondary server configured too, in case the primary fails.

- If all NTP servers fail, or the links to them fail, it doesn't destroy communication b/w nodes/devices.

- The 787 aircraft has a local Time Manager, like an NTP server.
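The NTP points above (primary/secondary servers, survival when all servers fail) rest on one small calculation. Below is a minimal sketch of the standard NTP on-wire offset/delay formulas, plus a try-servers-in-order loop; `query` is a hypothetical placeholder for a real NTP request, and the server names are made up.

```python
from typing import Callable, Optional, Sequence, Tuple

# (t1, t2, t3, t4): client send, server receive, server send, client receive
Timestamps = Tuple[float, float, float, float]


def offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> Tuple[float, float]:
    """Standard NTP on-wire estimate of clock offset and round-trip delay."""
    offset = ((t2 - t1) + (t3 - t4)) / 2   # how far the local clock is behind the server
    delay = (t4 - t1) - (t3 - t2)          # round trip minus server processing time
    return offset, delay


def sync_with_fallback(
    servers: Sequence[str],
    query: Callable[[str], Optional[Timestamps]],
) -> Optional[Tuple[str, float]]:
    """Try servers in order (primary first); return (server, offset) from the
    first one that answers, or None if all fail. On None the node simply keeps
    running on its own local clock; communication does not stop."""
    for server in servers:
        stamps = query(server)
        if stamps is not None:
            offset, _delay = offset_and_delay(*stamps)
            return server, offset
    return None


if __name__ == "__main__":
    # Hypothetical query: the primary is down, the secondary answers (times in ms).
    def fake_query(server: str) -> Optional[Timestamps]:
        return None if server == "primary" else (0, 600, 700, 300)

    print(offset_and_delay(0, 600, 700, 300))                        # (500.0, 200)
    print(sync_with_fallback(["primary", "secondary"], fake_query))  # ('secondary', 500.0)
```

The formula assumes the outbound and return paths take roughly equal time; asymmetry shows up directly as offset error.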

Hence, in my humble understanding, the actual transmit timestamp could be an issue if used wrongly in programming.

Every node has its own battery-powered local clock, so when the clock's counter reaches its max value it is expected to reset (wrap around).

If the sender's clock resets first, before the receiver's, & the timestamp received is lower than the receiver's, then a well-programmed logic can check the last 1 or 2 values & confirm that this reset is expected.
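A common alternative to checking the last values is modular ("serial number") comparison, in the spirit of RFC 1982: treat the counter as a circle and call a value "newer" if the forward distance to it is less than half the range. A minimal sketch, with the 32-bit width as an assumed example:

```python
def is_newer(a: int, b: int, bits: int = 32) -> bool:
    """True if counter value a comes 'after' b on a wrapping counter of width bits.

    The difference is taken modulo 2**bits and counts as 'forward' if it is
    less than half the range.
    """
    modulus = 1 << bits
    diff = (a - b) % modulus
    return 0 < diff < modulus // 2


def elapsed(now: int, then: int, bits: int = 32) -> int:
    """Ticks from then to now, correct even if the counter wrapped once in between."""
    return (now - then) % (1 << bits)


if __name__ == "__main__":
    before_wrap = 2**32 - 3       # counter value just before it rolls over
    after_wrap = 5                # value 8 ticks later, after the reset to 0
    print(is_newer(after_wrap, before_wrap))   # True, despite 5 < 4294967293
    print(elapsed(after_wrap, before_wrap))    # 8
```

This only holds while the real gap between two compared values is under half the counter range; beyond that, the comparison silently flips.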

So whether the root cause is a H/w malfunction or a S/w bug, there is redundancy in the network.

The same goes for a critical computer.

So at this point itself I would reject a CDN switch failure, unless it's a bad switch design/product for real-time applications.

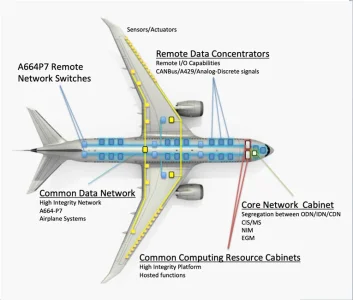

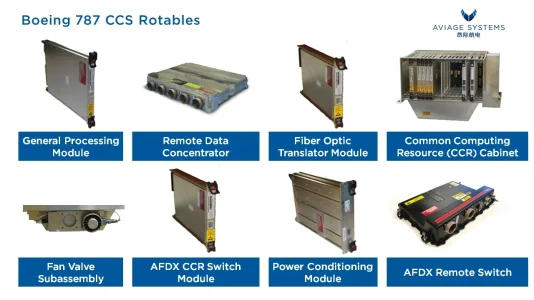

Now let's look at Boeing 787 H/w & S/w features:

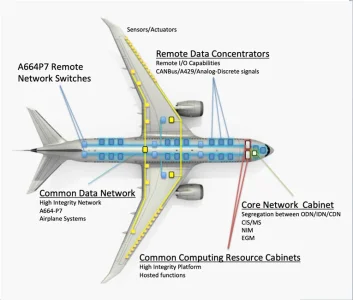

In the 787's CCS (Common Core System) diagram, there seem to be at least 6 CDN switches.

Because this is a small N/w, L3 routers with IP routing are not used; just L2 Ethernet will suffice.

We can see 2 parallel blue lines denoting 2 channels/sub-networks.

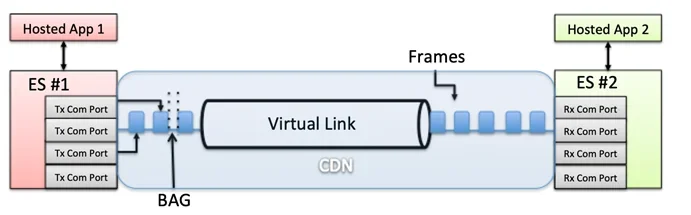

The avionics:

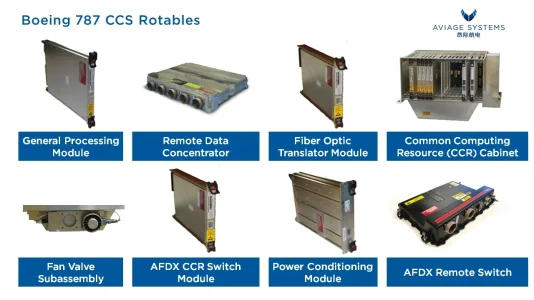

FMC H/w seems to be modular & redundant:

The 'End System' modules are connected via a PCI bus on the backplane, & each ES LRU (Line Replaceable Unit) has:

- 1x ASIC (I hope multi-core)

- 2x transceivers

- 1x config memory

- 1x RAM

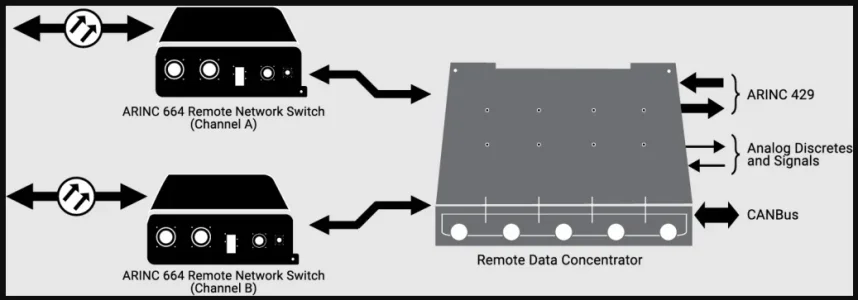

21 RDCs (Remote Data Concentrators) interface with components that use other protocols, analog signals, sensors, valves, pumps, etc.

They are connected to the 2 networks via CDN switches.

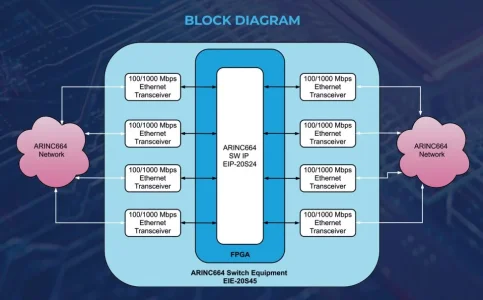

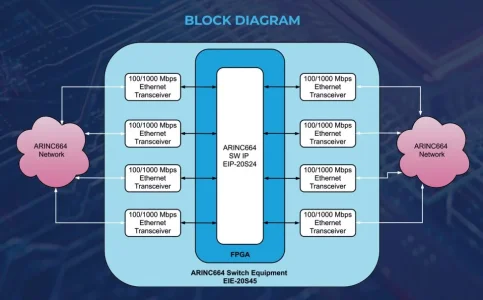

I don't have an exact picture of the 787 CDN switch; it would be something like the ones shown.

So we see that there are multiple ports, probably logically bundled in an EtherChannel port group, giving redundancy, load balancing & high availability.
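Member-link selection in a port-channel is typically hash based: all frames of one flow stay on one link (keeping them in order), while different flows spread across links. The exact hash fields and function are vendor specific; the CRC-32 of source/destination addresses below is only an assumed stand-in, as is the failover rule of rehashing over the surviving links.

```python
import zlib
from typing import List


def pick_link(src: str, dst: str, links: List[str]) -> str:
    """Pick a member link for a flow by hashing its source/destination addresses.

    The same flow always maps to the same link (keeps frames in order); if a
    link goes down, callers pass only the surviving links and flows rehash.
    """
    live = sorted(links)                     # stable order regardless of input order
    if not live:
        raise RuntimeError("no member links up")
    key = f"{src}->{dst}".encode()
    return live[zlib.crc32(key) % len(live)]


if __name__ == "__main__":
    links = ["eth1", "eth2"]
    first = pick_link("10.0.0.1", "10.0.0.2", links)
    print(first in links)                                      # True
    print(pick_link("10.0.0.1", "10.0.0.2", links) == first)  # True (deterministic)
    print(pick_link("10.0.0.1", "10.0.0.2", ["eth2"]))         # eth2 (after eth1 fails)
```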

There are also batteries + a RAT (Ram Air Turbine) for backup.

Now a few points about this avionics S/w & communication:

-

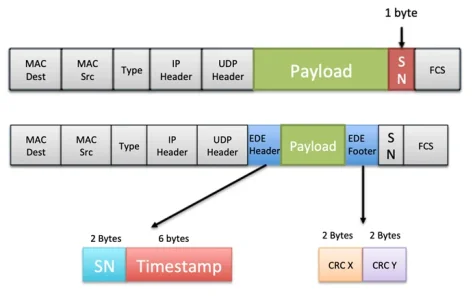

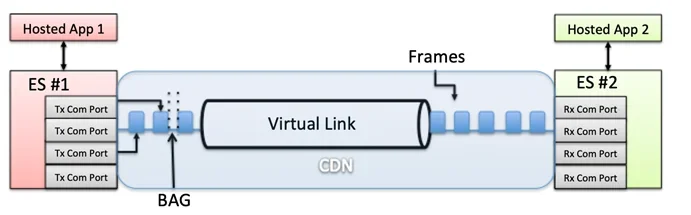

ARNIC 664 is Airbus version based on Ethernet, ATM (Async Transfer Mode), so it'll provide redundancy, speed, full-duplex, data integrity (CRC), etc.

- It seems to use a Virtual Link (VL) ID instead of a MAC address, hence a VL table instead of a MAC/CAM table, which can reject any erroneous data transmission.

- Reading further, IP addresses are also used, but not for routing, as this is a small N/w.

- CCS is an asynchronous system, decoupling this operation from the network interface.

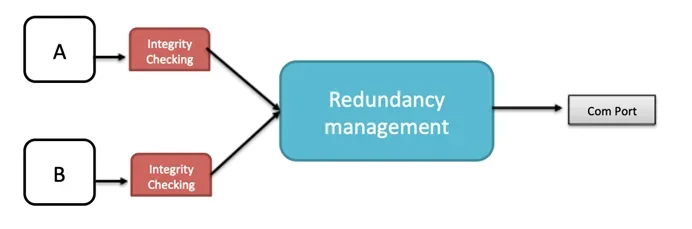

- Data is transmitted on

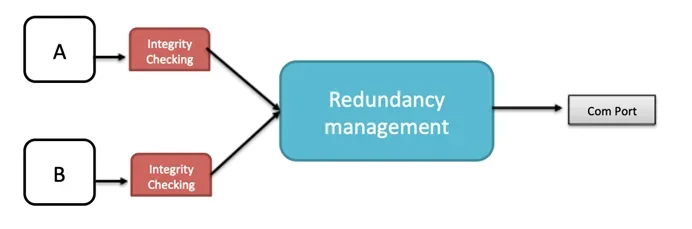

2 channels/networks. At recieving end there is

integity checking & redundancy management using '1st validation wins' policy.

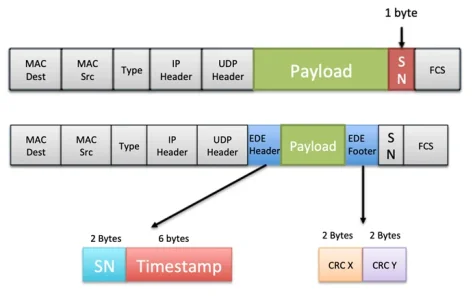

- But it seems to use UDP primarily, not TCP. UDP is used where some data loss is acceptable, like in video & audio, while TCP has 'sequences' & 'acknowledgements' for ordered delivery; UDP doesn't. Hence the ES (End System) has to add 1 byte of Sequence # (0-255) to the data at the physical link level.

- But then again, at the virtual link level, Boeing adds an extra EDE protocol with 1 more sequence #, & on top of that the actual timestamp & 2 CRCs. Maybe the extra sequence # is required to differentiate virtual link frames, but why the exact timestamp, IDK; maybe for logs. But more encapsulation, decapsulation & calculation means more complexity & delays.

- The timestamp is the transmit time & uses the local clock.

- As all nodes use their own local clock, CCS needs to centralize all local times for age validation. This is done by the Time Management function, which maintains a table of relative offsets for each ES in the CDN. The Time Agent of each ES is periodically queried by the Time Managers.

- The offset table is broadcast to each ES so it can verify the age of PDUs from other ESs.
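The dual-channel transmission, 'first valid wins' redundancy management and the 1-byte sequence number (0-255) from the bullets above can be sketched together. This is a simplified illustration under my own assumptions: one counter per virtual link, and a copy is accepted only if it passed its integrity check and is newer (modulo 256) than the last accepted one. The real ARINC 664 redundancy-management rules are more detailed than this.

```python
from typing import Dict, Optional


class RedundancyManager:
    """Simplified 'first valid wins' across network A and B, per virtual link."""

    def __init__(self) -> None:
        self._last: Dict[int, int] = {}   # vl_id -> last accepted sequence number

    @staticmethod
    def _newer(seq: int, last: int) -> bool:
        """Wrap-aware comparison for 8-bit sequence numbers (0-255)."""
        diff = (seq - last) % 256
        return 0 < diff < 128

    def receive(self, vl_id: int, seq: int, network: str, integrity_ok: bool) -> Optional[str]:
        """Return the network whose copy was accepted, or None if the frame is dropped."""
        if not integrity_ok:
            return None                   # failed CRC/FCS: never delivered
        last = self._last.get(vl_id)
        if last is not None and not self._newer(seq, last):
            return None                   # duplicate (other network already won) or stale
        self._last[vl_id] = seq
        return network


if __name__ == "__main__":
    rm = RedundancyManager()
    print(rm.receive(7, 10, "A", True))    # A     (first valid copy wins)
    print(rm.receive(7, 10, "B", True))    # None  (B's duplicate is dropped)
    print(rm.receive(7, 11, "A", False))   # None  (A's copy failed its CRC)
    print(rm.receive(7, 11, "B", True))    # B     (so B's copy is delivered)
```

The point for the argument here: losing or corrupting one channel costs nothing as long as the other channel's copy is valid.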

Ultimately, the consistency of the broadcast offset tables is what is questioned in the analysis.

Possible reasons given -

- The ES didn't get the offset table. [This is not possible in a redundant N/w, active-active or active-passive.]

- The age in the table > max configured age, so it's discarded, or the age is inconsistent. [This means corrupt data, but with CRC X + CRC Y + FCS + 2 channels + redundancy manager, how is this possible?]
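The age validation described in the Time Management bullets can be sketched as: convert the sender's transmit timestamp into the receiver's time base using the broadcast offset, then discard the PDU if its age exceeds the configured maximum or comes out negative. The offset convention (sender clock minus receiver clock, in microseconds), the names `offset_table` / `max_age_us` and the ES name are my assumptions, not Boeing's or IOActive's.

```python
from typing import Dict, Tuple


def check_age(sender: str,
              tx_timestamp_us: int,
              local_now_us: int,
              offset_table: Dict[str, int],
              max_age_us: int) -> Tuple[bool, str]:
    """Validate the age of a PDU against the broadcast offset table.

    offset_table[sender] is assumed to hold (sender clock - receiver clock) in us.
    """
    if sender not in offset_table:
        return False, "no offset for sender"          # e.g. offset table not received
    tx_in_local_time = tx_timestamp_us - offset_table[sender]
    age = local_now_us - tx_in_local_time
    if age < 0:
        return False, "inconsistent (negative) age"
    if age > max_age_us:
        return False, "stale: age exceeds max"
    return True, "ok"


if __name__ == "__main__":
    offsets = {"ES-12": 5_000}          # hypothetical ES whose clock runs 5 ms ahead
    print(check_age("ES-12", 1_005_000, 1_002_000, offsets, 10_000))  # (True, 'ok')
    print(check_age("ES-12", 1_005_000, 1_020_000, offsets, 10_000))  # (False, 'stale: age exceeds max')
    bad = {"ES-12": 0}                  # inconsistent offset table
    print(check_age("ES-12", 1_005_000, 1_002_000, bad, 10_000))      # (False, 'inconsistent (negative) age')
```

The last case shows why the offset table's consistency matters: the PDU itself is perfectly valid (CRCs would pass), yet a wrong offset makes it look inconsistent or stale.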

The response to this was that cosmic rays can still corrupt data after all the error checks.

Then every phase is at risk: the transmission source, the transit carrier & the destination.

And every electronic system, small or big, consumer goods or industrial, civil or military, is at risk, especially space objects like satellites, the ISS, etc.

(https://en.wikipedia.org/wiki/Cosmic_ray#Effect_on_electronics)

But there are also radiation hardening methods, physical & logical (electronic), since we have had high-altitude & space machines for decades now.

(https://en.wikipedia.org/wiki/Radiation_hardening)
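One of the logical (electronic) hardening methods is triple modular redundancy (TMR): keep three copies of a value and take a bitwise 2-of-3 majority vote, so a single-event upset in one copy is outvoted. A minimal sketch of the voter and a scrub step (real TMR is usually implemented in hardware or FPGA logic; this only shows the idea):

```python
from typing import List


def majority3(a: int, b: int, c: int) -> int:
    """Bitwise 2-of-3 vote: each output bit takes the value held by at least two copies."""
    return (a & b) | (a & c) | (b & c)


def scrub(copies: List[int]) -> List[int]:
    """Overwrite all three copies with the voted value, repairing one upset copy."""
    voted = majority3(*copies)
    return [voted, voted, voted]


if __name__ == "__main__":
    value = 0b1011_0010                 # 178
    upset = value ^ (1 << 4)            # simulated single-event upset in one copy
    print(majority3(value, upset, value) == value)   # True
    print(scrub([value, upset, value]))              # [178, 178, 178]
```

If two copies are hit in the same bit before a scrub, the vote is wrong, which is why scrubbing is done periodically.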

Also, our lovely atmosphere filters out most radiation, & the remaining effects diminish towards the surface.

I leave it to the audience to share their knowledge if they know of 100% shielding or space-grade solutions used in jets, etc.